Description of Problem & Solution

I want to use the FrameTimecode values to drive an ffmpeg process, but the FrameTimecode values differ from what ffmpeg reports.

For the media below, the first two scenes detected by the command `scenedetect -i Blossoms_at_the_Basin.mp4 detect-content list-scenes -n save-images` are:

| Scene # | Start Frame | Start Time   | End Frame | End Time     |
|---------|-------------|--------------|-----------|--------------|
| 1       | 0           | 00:00:00.000 | 462       | 00:00:19.269 |
| 2       | 462         | 00:00:19.269 | 635       | 00:00:26.485 |
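The timecodes in the table above can be cross-checked against the frame numbers. This is a minimal sketch, assuming a constant frame rate of 24000/1001 (about 23.976 fps). That rate is not stated in this issue; it is inferred only because it reproduces the listed times. The helper name `frame_to_timecode` is hypothetical and is not part of PySceneDetect.

```python
from fractions import Fraction

# Assumed frame rate (not stated in the issue); chosen because it
# reproduces the timecodes listed in the table.
ASSUMED_FPS = Fraction(24000, 1001)


def frame_to_timecode(frame_number, fps=ASSUMED_FPS):
    """Convert a 0-based frame number to HH:MM:SS.mmm at a constant frame rate."""
    total_ms = round(Fraction(frame_number) / fps * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}.{millis:03d}"


# Frame numbers taken from the table.
for frame_number in (0, 462, 635):
    print(frame_number, frame_to_timecode(frame_number))
```

Under that assumption the listed times are a pure function of the counted frame number, so any frame the reader skips would shift every later timecode relative to what ffmpeg derives from presentation timestamps.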

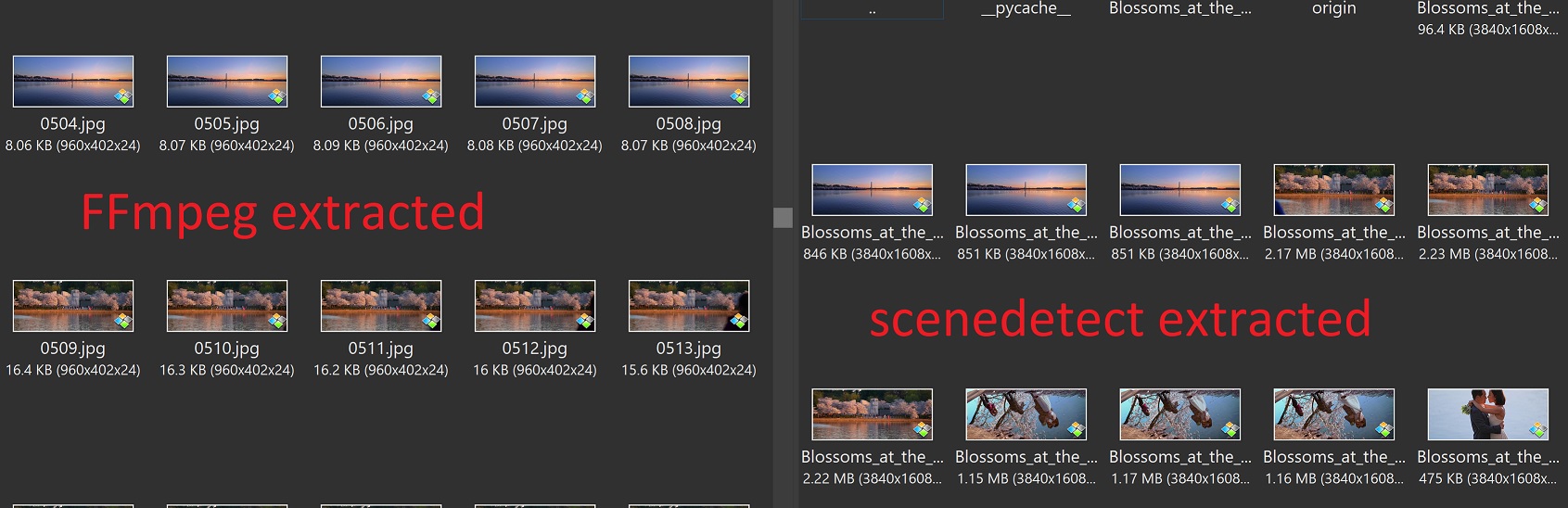

But the actual end frame number of scene 1 is 508 (start from 0), not 462. Look this:

I think the reason is that OpenCV's VideoCapture drops frames. I suggest using PyAV to read frames instead, because PyAV exposes the index and pts properties of each decoded frame.
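To illustrate the suspected effect (not to confirm it), here is a small sketch using plain numbers instead of a real file. It assumes a time base of 1/24000 s and 1001 ticks per frame, which are example values and are not read from the video above. `frame_number_from_pts` is a hypothetical helper. With PyAV, the pts values would come from `frame.pts`.

```python
from fractions import Fraction

# Assumed example values, not taken from the video in this issue.
TIME_BASE = Fraction(1, 24000)  # seconds per pts tick
TICKS_PER_FRAME = 1001          # pts ticks between consecutive frames


def frame_number_from_pts(pts, ticks_per_frame=TICKS_PER_FRAME):
    """Recover the true 0-based frame number from a presentation timestamp."""
    return round(Fraction(pts, ticks_per_frame))


# pts of the frames delivered by a hypothetical reader that silently
# skipped the frames with true numbers 2 and 3.
delivered_pts = [0, 1001, 4004, 5005]

counted = list(range(len(delivered_pts)))
from_pts = [frame_number_from_pts(pts) for pts in delivered_pts]
seconds = [float(pts * TIME_BASE) for pts in delivered_pts]

for c, p, s in zip(counted, from_pts, seconds):
    print(f"counted={c} from_pts={p} time={s:.3f}s")
```

A reader that only counts the frames it receives falls behind after the gap, while the number derived from pts stays aligned with the container's timeline.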

Media Examples:

Blossoms_at_the_Basin.mp4 is the 4K version of https://www.youtube.com/watch?v=WzD_PREISiM

Proposed Implementation:

Here is a demo to read frames with PyAV:

    import sys

    import av
    import cv2
    import numpy

    from scenedetect.video_manager import compute_downscale_factor


    class Video():
        """Thin wrapper around a PyAV container that yields decoded frames."""

        def __init__(self, video):
            self.video = video
            self.container = av.open(video)
            self.stream = self.container.streams.video[0]
            self.width = self.stream.codec_context.width

            def _get_frame_rate(stream: av.video.stream.VideoStream):
                # Prefer the stream's average rate; fall back to the time base.
                if stream.average_rate.denominator and stream.average_rate.numerator:
                    return float(stream.average_rate)
                if stream.time_base.denominator and stream.time_base.numerator:
                    return 1.0 / float(stream.time_base)
                else:
                    raise ValueError("Unable to determine FPS")

            self.frame_rate = _get_frame_rate(self.stream)

        def frames(self):
            # Yield (frame index, BGRA pixel array) for every decoded frame.
            for frame in self.container.decode(video=0):
                yield frame.index, frame.to_ndarray(format='bgra')


    def compute_delta_hsv(i1, i2):
        """Mean absolute per-pixel difference, averaged over the H, S and V channels."""
        # cv2.split may return a tuple, so convert to a list before assigning.
        i1_hsv = list(cv2.split(cv2.cvtColor(i1, cv2.COLOR_BGR2HSV)))
        i2_hsv = list(cv2.split(cv2.cvtColor(i2, cv2.COLOR_BGR2HSV)))
        delta_hsv = [0, 0, 0, 0]
        for i in range(3):
            num_pixels = i1_hsv[i].shape[0] * i1_hsv[i].shape[1]
            # Widen to int32 so the subtraction cannot wrap around in uint8.
            i1_hsv[i] = i1_hsv[i].astype(numpy.int32)
            i2_hsv[i] = i2_hsv[i].astype(numpy.int32)
            delta_hsv[i] = numpy.sum(numpy.abs(i1_hsv[i] - i2_hsv[i])) / float(num_pixels)
        return sum(delta_hsv[0:3]) / 3.0


    video = Video(sys.argv[1])
    threshold = 30.0
    factor = compute_downscale_factor(video.width)
    last_frame = None

    for index, frame in video.frames():
        # Downscale by striding and drop the alpha channel (BGRA -> BGR).
        frame = frame[::factor, ::factor, :3]
        if last_frame is None:
            last_frame = frame
            continue
        hsv = compute_delta_hsv(last_frame, frame)
        if hsv >= threshold:
            # Print the index of the first frame of each new scene.
            print(index)
        last_frame = frame